I am currently a second-year Master’s student in the R&L Group at Nanjing University, supervised by Professor Yang Gao and Professor Jing Huo. Previously, I received my B.S. in Engineering from Xiamen University.

The goal of my research is to equip robots with versatile capabilities for open-world manipulation tasks, particularly in household settings. To achieve this, I focus on 1) utilizing foundation models and Internet-scale data to enhance robots’ environmental understanding, and 2) designing algorithms that enable adaptive and scalable generalization to diverse scenarios. I am particularly interested in vision-language-action models for robotic manipulation.

🔥 News

- 2025.01: 🎉🎉 Our paper GravMAD was accepted to ICLR 2025.

- 2025.01: One paper on a robotic world model for long-horizon manipulation has been released.

📝 Publications

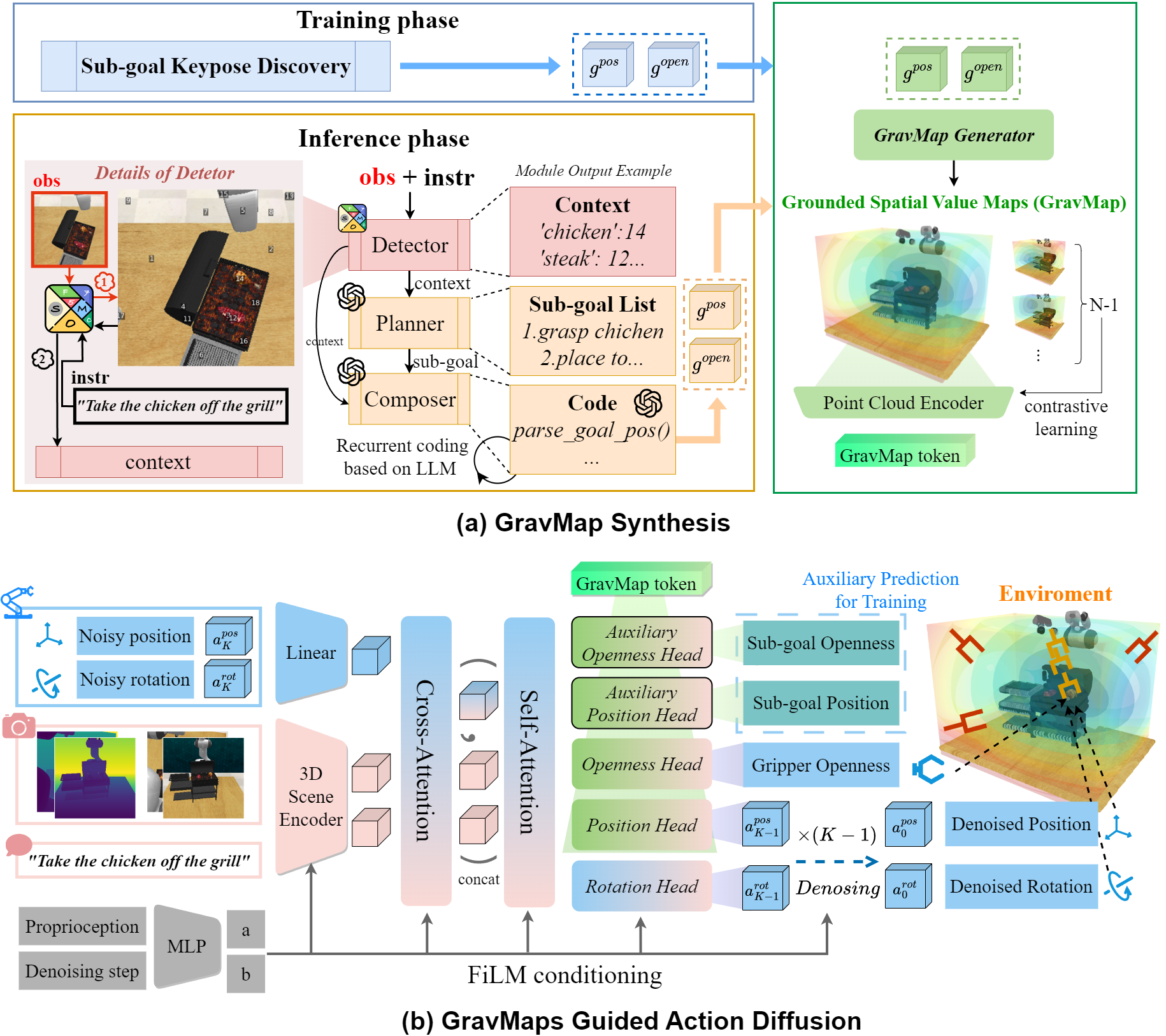

GravMAD: Grounded Spatial Value Maps Guided Action Diffusion for Generalized 3D Manipulation, Yangtao Chen*, Zixuan Chen*, et al. ICLR 2025.

- GravMAD introduces a sub-goal-driven, language-conditioned framework that combines imitation learning and foundation models for improved 3D task execution and generalization.

- Empirical results show that GravMAD outperforms existing methods by 28.63% on novel tasks and 13.36% on seen tasks, demonstrating its effectiveness in multi-task learning and generalization in 3D manipulation.

🎖 Honors and Awards

- 2023.11 Nanjing University Graduate Academic Scholarship

- 2024.11 Nanjing University Graduate Academic Scholarship

📖 Education

- 2023.09 - 2026.06, Master, Nanjing University, Nanjing.

- 2019.09 - 2023.06, Undergraduate, Xiamen University, Xiamen.